About

News and Events

Research and Programs

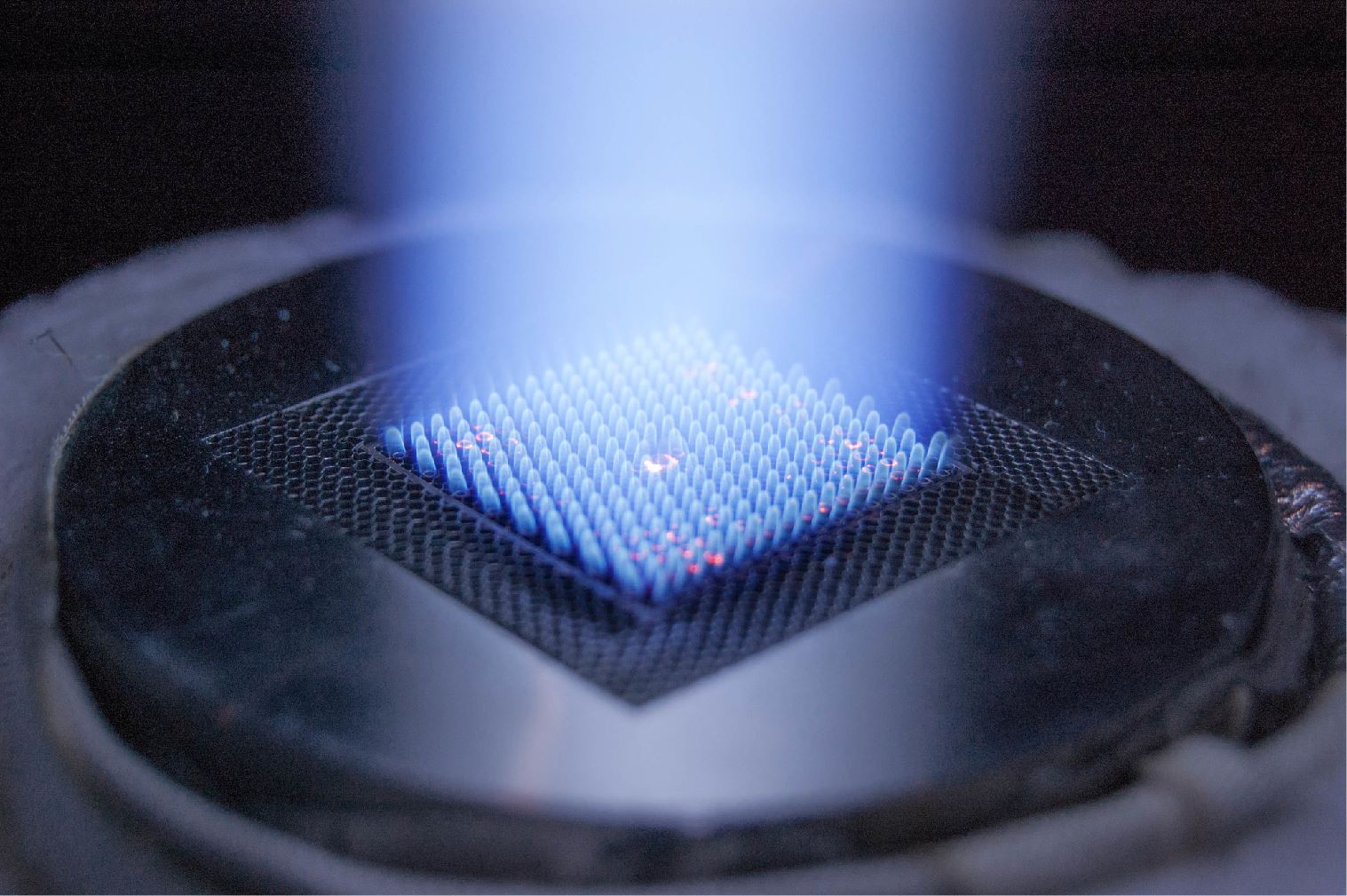

Featured Initiatives
- Advanced Alloy Signature Center (AASC)
- Center for Fuels and Chemicals (CFC)
- Center for Microwave Chemistry (CMC)
- Coal for High-Value Products
- Critical Minerals and Materials
- Natural Gas
- Science-based Artificial Intelligence and Machine Learning Institute (SAMI)
- Subsurface Science
- Turbine System Optimization

Major Fossil Energy Programs
- Carbon Management
- Resource Sustainability

Core Competencies
- Computational Science and Engineering
- Energy Conversion Engineering
- Geological and Environmental Systems
- Materials Engineering and Manufacturing
- Strategic Systems Analysis and Engineering
- Program Execution and Integration

Energy Technology Development
- Office of Critical Minerals and Energy Innovation
- Battery Workforce Initiative
- Office of Cybersecurity, Energy Security, and Emergency Response
- Office of Electricity

University Training & Research
- Historically Black Colleges and Universities and Minority Serving Institutions Program
- University Carbon Research Program

Business

Library

Explore our Library

- Approved Categorical Exclusions
- Environmental Assessments
- Environmental Impact Statements
- Oil and Gas Projects Summaries
- NETL Fact Sheets
- Publication Search
- Energy Data Exchange (EDX)
- FECM External R&D Final Technical Reports
- Project Landing Page
- Summary Information for External R&D Awards
- NETL R&D Publication Search
- Peer Review Reports

- Research and Programs

- Featured Initiatives

- Core Competencies

- Energy Technology Development

- University Training & Research

- Business

- Technology Transfer

- Library

- Energy Analysis

- About

- News and Events

- Education